Can ChatGPT-like Generative Models Guarantee Factual Accuracy? On the Mistakes of Microsoft's New Bing

Recently, conversational AI models such as OpenAI's ChatGPT [1] have captured the public imagination with their ability to generate high-quality written content, hold human-like conversations, answer factual questions, and more. Armed with such potential, Microsoft and Google have announced new services [2] that combine these models with traditional search engines. The new wave of conversation-powered search engines has the potential to answer complex questions naturally, summarize search results, and even serve as a creative tool. However, in doing so, the tech companies now face a greater ethical challenge: ensuring that their models do not mislead users with false, ungrounded, or conflicting answers. Hence, the question naturally arises: Can ChatGPT-like models guarantee factual accuracy? In this article, we uncover several factual mistakes in Microsoft's new Bing [9] and Google's Bard [3] which suggest that they currently cannot.

Unfortunately, false expectations can lead to disastrous results. Around the same time as Microsoft's new Bing announcement, Google hastily announced a new conversational AI service named Bard. Despite the hype, expectations were quickly shattered when Bard made a factual mistake in the promotional video [14], eventually tanking Google's share price [4] by nearly 8% and wiping $100 billion off its market value. By contrast, Microsoft's new Bing has received less scrutiny. In the demonstration video [8], we found that the new Bing recommended a rock singer as a top poet, fabricated birth and death dates, and even made up an entire summary of fiscal reports. Despite disclaimers [9] that the new Bing's responses may not always be factual, overly optimistic sentiment may lead to disillusionment. Hence, our goal is to draw attention to the factual challenges faced by conversation-powered search engines so that we may better address them in the future.

What factual mistakes did Microsoft's new Bing demonstrate?

Microsoft released the new AI-powered Bing search engine, claiming that it will revolutionize the scope of traditional search engines. Is this really the case? We dug deeper into the demonstration video [8] and examples [9], and found three main types of factual issues:

● Claims that conflict with the reference sources.

● Claims that don't exist in the reference sources.

● Claims that don't have a reference source, and are inconsistent with multiple web sources.
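The three categories above can be sketched as a small decision procedure. This is only an illustrative sketch: the names `FactualIssue` and `classify_claim` and all of their parameters are hypothetical, and the exact string comparison stands in for the manual checking against sources that the analysis actually requires.

```python
from enum import Enum
from typing import Optional


class FactualIssue(Enum):
    """The three issue types listed above (names are hypothetical)."""
    CONFLICTS_WITH_SOURCE = "claim conflicts with the reference source"
    NOT_IN_SOURCE = "claim does not exist in the reference source"
    UNSOURCED_INCONSISTENT = (
        "claim has no reference source and is inconsistent with web sources"
    )


def classify_claim(
    claimed: str,
    source_value: Optional[str],
    has_source: bool,
    consistent_with_web: bool = True,
) -> Optional[FactualIssue]:
    """Return the issue type for a claim, or None if no issue is detected.

    claimed: the value stated in the generated answer.
    source_value: the value found in the cited source, or None if the
        source does not contain the claim at all.
    has_source: whether the answer cites any reference source.
    consistent_with_web: for unsourced claims, whether the claim agrees
        with other web sources (an assumption supplied by the checker).
    """
    if not has_source:
        # No citation: only flagged when it also disagrees with web sources.
        return None if consistent_with_web else FactualIssue.UNSOURCED_INCONSISTENT
    if source_value is None:
        return FactualIssue.NOT_IN_SOURCE
    if claimed != source_value:
        return FactualIssue.CONFLICTS_WITH_SOURCE
    return None


# Example: a generated figure of 5.9% checked against a source figure of 3.9%.
issue = classify_claim("5.9%", "3.9%", has_source=True)
print(issue)
```

In practice, deciding whether a claim "conflicts" with a source needs human judgment or a more careful comparison than string equality; the sketch only makes the ordering of the three checks explicit.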

Fabricated numbers in financial reports: be careful when you trust the new Bing!

To our surprise, the new Bing fabricated an entire summary of the financial report in the demonstration!

When Microsoft executive Yusuf Mehdi showed the audience how to use the command "key takeaways from the page" to auto-generate a summary of the Gap Inc. 2022 Q3 Fiscal Report [10a], he received the following results:

Figure 1. Summary of the Gap Inc. fiscal report generated by the new Bing in the press release.

However, upon closer examination, all the key figures in the generated summary are inaccurate. We show excerpts from the original financial report below as supporting references. According to the new Bing, the operating margin after adjustment was 5.9%, whereas the source report gives 3.9%.

Latest

ChatGPT vs. Bard: What Can We Expect?

February 22nd 2023

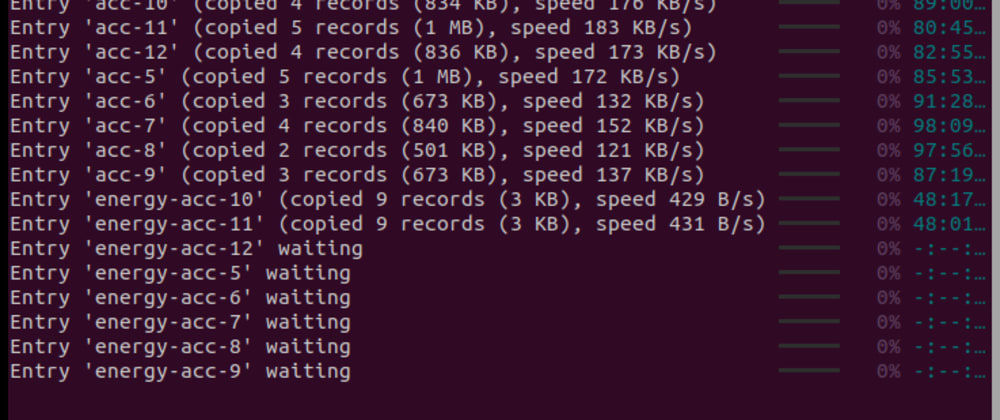

CLI Client for ReductStore v0.7.0 has been released

February 22nd 2023

Notebooks for DevOps and SRE: Open-sourcing the Fiberplane plugin system

February 22nd 2023