ChatGPT vs. Bard: What Can We Expect?

Big tech just dropped some big news: Google is soon releasing its own ChatGPT-like large language model (LLM) called Bard. Now many are beginning to speculate about what exactly this AI battle is going to look like. Will the newcomer Bard dominate our reigning champion, ChatGPT? Or will the incumbent conversational AI model defend its crown? It's a real Rocky vs. Creed story. But honestly, it's hard to tell which AI is the main character. To each, the other is a worthy opponent.

So here's what we can expect. First, we'll look at how these models were trained (pun intended), and then we'll examine their build (again, pun intended). By the end, we might be able to determine which model is the underdog and which is the public's favorite. 🥊

Their training...

Just like boxers, AI models have to train before they can show their skills to the public. Both ChatGPT and Bard have unique training styles. Specifically, ChatGPT runs on a GPT-3.5 model while Bard runs on LaMDA.

But what does that mean?

Well, we can think of GPT-3.5 as ChatGPT's "brain" and LaMDA as Bard's. The main thing they have in common is that both are built on Transformers. Transformers are quite a hefty topic, so if you'd like a deep dive, read our definitive guide on Transformers. For our purposes, though, all you need to know is that Transformers allow these language models to "read" human writing, to pay attention to how the words in that writing relate to one another, and to predict what words will come next based on the words that came ___.

(If you can fill in the blank above, you think like a Transformer-based AI language model. Or, more properly, the model thinks like you 😉)
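To make "paying attention to how words relate" a little more concrete, here is a minimal sketch of causal scaled dot-product self-attention, the core Transformer operation, in plain Python. It is a toy under loud assumptions: the two-dimensional word vectors are made up for illustration, each vector doubles as its own query, key, and value (real models learn separate projections and stack many attention heads and layers), and `causal_self_attention` is a hypothetical helper, not GPT-3.5's or LaMDA's actual code. The causal mask is the part that lets each word look only at itself and the words that came before it.

```python
import math


def softmax(scores):
    # Subtract the max score before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def causal_self_attention(vectors):
    """Toy scaled dot-product self-attention with a causal mask.

    Assumption: each word vector is used directly as its query, key, and value.
    Returns (outputs, weights), where weights[i] is how strongly word i
    attends to words 0..i.
    """
    d = len(vectors[0])
    outputs, all_weights = [], []
    for i, query in enumerate(vectors):
        visible = vectors[: i + 1]  # causal mask: no peeking at later words
        scores = [dot(query, key) / math.sqrt(d) for key in visible]
        weights = softmax(scores)
        # Blend the visible value vectors according to the attention weights.
        mixed = [sum(w * v[j] for w, v in zip(weights, visible)) for j in range(d)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


if __name__ == "__main__":
    # Hypothetical 2-D embeddings, invented purely for this example.
    embeddings = {"the": [1.0, 0.0], "cat": [0.0, 1.0], "sat": [0.5, 1.0]}
    sentence = ["the", "cat", "sat"]
    _, weights = causal_self_attention([embeddings[w] for w in sentence])
    for word, row in zip(sentence, weights):
        print(word, [round(w, 2) for w in row])
```

Each printed row shows how much one word "attends" to itself and to the words before it, and every row sums to 1. A real model would then use these blended vectors to score every word in its vocabulary and pick a likely next one, which is how it fills in a blank like the one above.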

Okay, so we know that both ChatGPT and Bard have neural net "brains" that can read. But as far as we know, that's where the commonalities end. Now come the differences. Mainly, they differ in what they read.

OpenAI has been relatively secretive about what dataset GPT-3.5 was trained on. But we do know that GPT-3 was trained largely on filtered Common Crawl web text, books, and English Wikipedia, sources that overlap heavily with The Pile: an open dataset from EleutherAI that contains complete fiction and nonfiction books, texts from GitHub, all of English Wikipedia, StackExchange, PubMed, and much more. As a result, we can assume that GPT-3.5 has at least a little bit of Pile-like text within its metaphorical gears as well. And The Pile is massive, weighing in at a little over 825 gigabytes of raw text.

Check out the contents of The Pile below (source):

Latest

Can ChatGPT-like Generative Models Guarantee Factual Accuracy? On the Mistakes of Microsoft's New Bing

February 22nd 2023

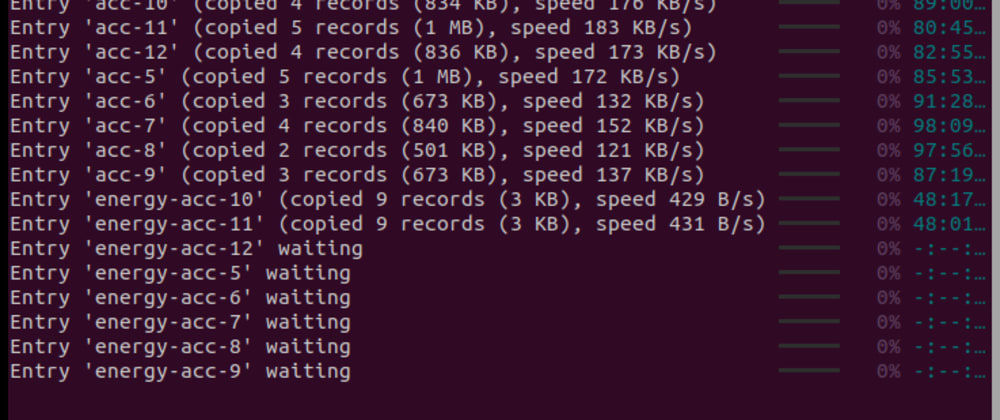

CLI Client for ReductStore v0.7.0 has been released

February 22nd 2023

Notebooks for DevOps and SRE: Open-sourcing the Fiberplane plugin system

February 22nd 2023